CDO User Guide

Climate Data Operator

Version 2.5.4

Nov 2025

Uwe Schulzweida – MPI for Meteorology

Contents

1.1 Installation

1.1.1 Unix

1.1.2 MacOS

1.1.3 Windows

1.2 Usage

1.2.1 Options

1.2.2 Environment variables

1.2.3 Operators

1.2.4 Parallelized operators

1.2.5 Operator parameter

1.2.6 Operator chaining

1.2.7 Chaining Benefits

1.3 Advanced Usage

1.3.1 Wildcards

1.3.2 Argument Groups

1.3.3 Applying a operator or chain to multiple inputs

1.3.4 Apply with [:] notation

1.3.5 Apply Keyword (LEGACY)

1.4 Memory Requirements

1.5 Horizontal grids

1.5.1 Grid area weights

1.5.2 Grid description

1.5.3 ICON - Grid File Server

1.6 Z-axis description

1.7 Time axis

1.7.1 Absolute time

1.7.2 Relative time

1.7.3 Conversion of the time

1.8 Parameter table

1.9 Missing values

1.9.1 Mean and average

1.10 Percentile

1.10.1 Percentile over timesteps

1.11 Regions

2 Reference manual

2.1 Information

2.1.1 INFO - Information and simple statistics

2.1.2 SINFO - Short information

2.1.3 XSINFO - Extra short information

2.1.4 DIFF - Compare two datasets field by field

2.1.5 NINFO - Print the number of parameters, levels or times

2.1.6 SHOWINFO - Show variable information

2.1.7 SHOWATTRIBUTE - Show attributes

2.1.8 FILEDES - Dataset description

2.2 File operations

2.2.1 APPLY - Apply operators

2.2.2 COPY - Copy datasets

2.2.3 TEE - Duplicate a data stream and write it to file

2.2.4 PACK - Pack data

2.2.5 UNPACK - Unpack data

2.2.6 SETCHUNKSPEC - Specify chunking

2.2.7 SETFILTER - Specify filter

2.2.8 BITROUNDING - Bit rounding

2.2.9 REPLACE - Replace variables

2.2.10 DUPLICATE - Duplicates a dataset

2.2.11 MERGEGRID - Merge grid

2.2.12 MERGE - Merge datasets

2.2.13 SPLIT - Split a dataset

2.2.14 SPLITTIME - Split timesteps of a dataset

2.2.15 SPLITSEL - Split selected timesteps

2.2.16 SPLITDATE - Splits a file into dates

2.2.17 DISTGRID - Distribute horizontal grid

2.2.18 COLLGRID - Collect horizontal grid

2.3 Selection

2.3.1 SELECT - Select fields

2.3.2 SELMULTI - Select multiple fields via GRIB1 parameters

2.3.3 SELVAR - Select fields

2.3.4 SELTIME - Select timesteps

2.3.5 SELBOX - Select a box

2.3.6 SELREGION - Select horizontal regions

2.3.7 SELGRIDCELL - Select grid cells

2.3.8 SAMPLEGRID - Resample grid

2.3.9 SELYEARIDX - Select year by index

2.3.10 SELTIMEIDX - Select timestep by index

2.3.11 SELSURFACE - Extract surface

2.4 Conditional selection

2.4.1 COND - Conditional select one field

2.4.2 COND2 - Conditional select two fields

2.4.3 CONDC - Conditional select a constant

2.4.4 MAPREDUCE - Reduce fields to user-defined mask

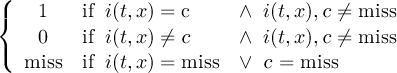

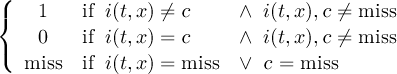

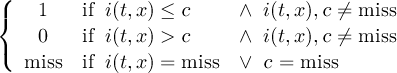

2.5 Comparison

2.5.1 COMP - Comparison of two fields

2.5.2 COMPC - Comparison of a field with a constant

2.5.3 YMONCOMP - Multi-year monthly comparison

2.5.4 YSEASCOMP - Multi-year seasonal comparison

2.6 Modification

2.6.1 SETATTRIBUTE - Set attributes

2.6.2 SETPARTAB - Set parameter table

2.6.3 SET - Set field info

2.6.4 SETTIME - Set time

2.6.5 CHANGE - Change field header

2.6.6 SETGRID - Set grid information

2.6.7 SETZAXIS - Set z-axis information

2.6.8 INVERT - Invert latitudes

2.6.9 INVERTLEV - Invert levels

2.6.10 SHIFTXY - Shift field

2.6.11 MASKREGION - Mask regions

2.6.12 MASKBOX - Mask a box

2.6.13 SETBOX - Set a box to constant

2.6.14 ENLARGE - Enlarge fields

2.6.15 SETMISS - Set missing value

2.6.16 VERTFILLMISS - Vertical filling of missing values

2.6.17 TIMFILLMISS - Temporal filling of missing values

2.6.18 SETGRIDCELL - Set the value of a grid cell

2.7 Arithmetic

2.7.1 EXPR - Evaluate expressions

2.7.2 MATH - Mathematical functions

2.7.3 ARITHC - Arithmetic with a constant

2.7.4 ARITH - Arithmetic on two datasets

2.7.5 DAYARITH - Daily arithmetic

2.7.6 MONARITH - Monthly arithmetic

2.7.7 YEARARITH - Yearly arithmetic

2.7.8 YHOURARITH - Multi-year hourly arithmetic

2.7.9 YDAYARITH - Multi-year daily arithmetic

2.7.10 YMONARITH - Multi-year monthly arithmetic

2.7.11 YSEASARITH - Multi-year seasonal arithmetic

2.7.12 ARITHDAYS - Arithmetic with days

2.7.13 ARITHLAT - Arithmetic with latitude

2.8 Statistical values

2.8.1 TIMCUMSUM - Cumulative sum over all timesteps

2.8.2 CONSECSTAT - Consecute timestep periods

2.8.3 VARSSTAT - Statistical values over all variables

2.8.4 ENSSTAT - Statistical values over an ensemble

2.8.5 ENSSTAT2 - Statistical values over an ensemble

2.8.6 ENSVAL - Ensemble validation tools

2.8.7 FLDSTAT - Statistical values over a field

2.8.8 ZONSTAT - Zonal statistics

2.8.9 MERSTAT - Meridional statistics

2.8.10 GRIDBOXSTAT - Statistical values over grid boxes

2.8.11 REMAPSTAT - Remaps source points to target cells

2.8.12 VERTSTAT - Vertical statistics

2.8.13 TIMSELSTAT - Time range statistics

2.8.14 TIMSELPCTL - Time range percentile values

2.8.15 RUNSTAT - Running statistics

2.8.16 RUNPCTL - Running percentile values

2.8.17 TIMSTAT - Statistical values over all timesteps

2.8.18 TIMPCTL - Percentile values over all timesteps

2.8.19 HOURSTAT - Hourly statistics

2.8.20 HOURPCTL - Hourly percentile values

2.8.21 DAYSTAT - Daily statistics

2.8.22 DAYPCTL - Daily percentile values

2.8.23 MONSTAT - Monthly statistics

2.8.24 MONPCTL - Monthly percentile values

2.8.25 YEARMONSTAT - Yearly mean from monthly data

2.8.26 YEARSTAT - Yearly statistics

2.8.27 YEARPCTL - Yearly percentile values

2.8.28 SEASSTAT - Seasonal statistics

2.8.29 SEASPCTL - Seasonal percentile values

2.8.30 YHOURSTAT - Multi-year hourly statistics

2.8.31 DHOURSTAT - Multi-day hourly statistics

2.8.32 DMINUTESTAT - Multi-day by the minute statistics

2.8.33 YDAYSTAT - Multi-year daily statistics

2.8.34 YDAYPCTL - Multi-year daily percentile values

2.8.35 YMONSTAT - Multi-year monthly statistics

2.8.36 YMONPCTL - Multi-year monthly percentile values

2.8.37 YSEASSTAT - Multi-year seasonal statistics

2.8.38 YSEASPCTL - Multi-year seasonal percentile values

2.8.39 YDRUNSTAT - Multi-year daily running statistics

2.8.40 YDRUNPCTL - Multi-year daily running percentile values

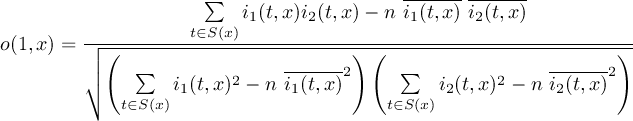

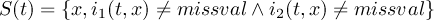

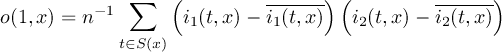

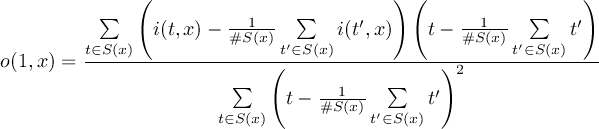

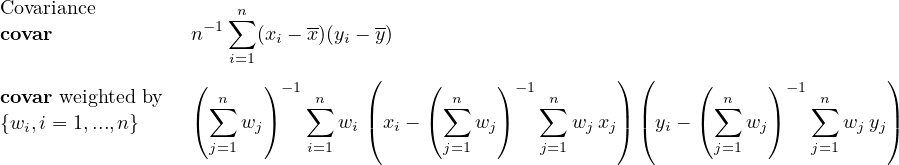

2.9 Correlation and co.

2.9.1 FLDCOR - Correlation in grid space

2.9.2 TIMCOR - Correlation over time

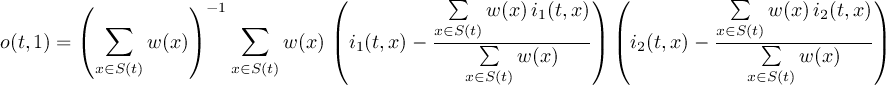

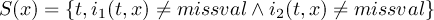

2.9.3 FLDCOVAR - Covariance in grid space

2.9.4 TIMCOVAR - Covariance over time

2.10 Regression

2.10.1 REGRES - Regression

2.10.2 DETREND - Detrend time series

2.10.3 TREND - Trend of time series

2.10.4 TRENDARITH - Add or subtract a trend

2.11 Eof

2.11.1 EOF - Empirical Orthogonal Functions

2.11.2 EOFCOEFF - Principal coefficients of EOFs

2.12 Interpolation

2.12.1 REMAPBIL - Bilinear interpolation

2.12.2 REMAPBIC - Bicubic interpolation

2.12.3 REMAPNN - Nearest neighbor remapping

2.12.4 REMAPDIS - Distance weighted average remapping

2.12.5 REMAPCON - First order conservative remapping

2.12.6 REMAPLAF - Largest area fraction remapping

2.12.7 REMAP - Grid remapping

2.12.8 REMAPETA - Remap vertical hybrid level

2.12.9 VERTINTML - Vertical interpolation

2.12.10 VERTINTAP - Vertical pressure interpolation

2.12.11 VERTINTGH - Vertical height interpolation

2.12.12 INTLEVEL - Linear level interpolation

2.12.13 INTLEVEL3D - Linear level interpolation from/to 3D vertical coordinates

2.12.14 INTTIME - Time interpolation

2.12.15 INTYEAR - Year interpolation

2.13 Transformation

2.13.1 SPECTRAL - Spectral transformation

2.13.2 SPECCONV - Spectral conversion

2.13.3 WIND2 - D and V to velocity potential and stream function

2.13.4 WIND - Wind transformation

2.13.5 FOURIER - Fourier transformation

2.14 Import/Export

2.14.1 IMPORTBINARY - Import binary data sets

2.14.2 IMPORTCMSAF - Import CM-SAF HDF5 files

2.14.3 INPUT - Formatted input

2.14.4 OUTPUT - Formatted output

2.14.5 OUTPUTTAB - Table output

2.14.6 OUTPUTGMT - GMT output

2.15 Miscellaneous

2.15.1 GRADSDES - GrADS data descriptor file

2.15.2 AFTERBURNER - ECHAM standard post processor

2.15.3 FILTER - Time series filtering

2.15.4 GRIDCELL - Grid cell quantities

2.15.5 SMOOTH - Smooth grid points

2.15.6 DELTAT - Difference between timesteps

2.15.7 REPLACEVALUES - Replace variable values

2.15.8 GETGRIDCELL - Get grid cell index

2.15.9 VARGEN - Generate a field

2.15.10 TIMSORT - Timsort

2.15.11 WINDTRANS - Wind Transformation

2.15.12 ROTUV - Rotation

2.15.13 MROTUVB - Backward rotation of MPIOM data

2.15.14 MASTRFU - Mass stream function

2.15.15 PRESSURE - Pressure on model levels

2.15.16 DERIVEPAR - Derived model parameters

2.15.17 ADISIT - Potential temperature to in-situ temperature and vice versa

2.15.18 RHOPOT - Calculates potential density

2.15.19 HISTOGRAM - Histogram

2.15.20 SETHALO - Set the bounds of a field

2.15.21 WCT - Windchill temperature

2.15.22 FDNS - Frost days where no snow index per time period

2.15.23 STRWIN - Strong wind days index per time period

2.15.24 STRBRE - Strong breeze days index per time period

2.15.25 STRGAL - Strong gale days index per time period

2.15.26 HURR - Hurricane days index per time period

2.15.27 CMORLITE - CMOR lite

2.15.28 VERIFYGRID - Verify grid coordinates

2.15.29 HEALPIX - Change healpix resolution

3 Contributors

3.1 History

3.2 External sources

3.3 Contributors

A Environment Variables

B Parallelized operators

C Standard name table

D Grid description examples

D.1 Example of a curvilinear grid description

D.2 Example description for an unstructured grid

Operator catalog

Operator list

1 Introduction

The Climate Data Operator (CDO) software is a collection of many operators for standard processing of climate and forecast model data. The operators include simple statistical and arithmetic functions, data selection and subsampling tools, and spatial interpolation. CDO was developed to have the same set of processing functions for GRIB [GRIB] and NetCDF [NetCDF] datasets in one package.

The Climate Data Interface [CDI] is used for the fast and file format independent access to GRIB and NetCDF datasets. The local MPI-MET data formats SERVICE, EXTRA and IEG are also supported.

There are some limitations for GRIB and NetCDF datasets:

- GRIB

-

datasets have to be consistent, similar to NetCDF. That means all time steps need to have the same variables, and within a time step each variable may occur only once. Multiple fields in single GRIB2 messages are not supported!

- NetCDF

-

datasets are only supported for the classic data model and arrays up to 4 dimensions. These dimensions should only be used by the horizontal and vertical grid and the time. The NetCDF attributes should follow the GDT, COARDS or CF Conventions.

The main CDO features are:

-

More than 700 operators available

-

Modular design and easily extendable with new operators

-

Very simple UNIX command line interface

-

A dataset can be processed by several operators, without storing the interim results in files

-

Most operators handle datasets with missing values

-

Fast processing of large datasets

-

Support of many different grid types

-

Tested on many UNIX/Linux systems, Cygwin, and MacOS-X

Latest pdf documentation be found here.

1.1 Installation

CDO is supported in different operative systems such as Unix, macOS and Windows. This section describes how to install CDO in those platforms. More examples are found on the main website ( https://code.mpimet.mpg.de/projects/cdo/wiki)

1.1.1 Unix

1.1.1.1. Prebuilt CDO packages

Prebuilt CDO versions are available in online Unix repositories, and you can install them by typing on the Unix terminal

apt-get install cdo

Note that prebuilt libraries do not offer the most recent version, and their version might vary with the Unix

system (see table below). It is recommended to build from the source or Conda environment for an updated

version or a customised setting.

| Unix OS | CDO Version | |

| 11 (Bullseye) | 1.9.10-1 | |

| 10 (Buster) | 1.9.6-1 | |

|

Debian | Sid | 2.0.6-2 |

| 13 | 2.0.6 | |

|

FreeBSD | 12 | 2.0.6 |

| Leap 15.3 | 2.0.6 | |

|

openSUSE | Tumbleweed | 2.0.6-1 |

| 18.04 LTS | 1.9.3 | |

| 20.04 LTS | 1.9.9 | |

|

Ubuntu | 22.04 LTS | 2.0.4-1 |

1.1.1.2. Building from sources

CDO uses the GNU configure and build system for compilation. The only requirement is a working ISO C++20 and C11 compiler.

First go to the download page (https://code.mpimet.mpg.de/projects/cdo) to get the latest distribution, if you do not have it yet.

To take full advantage of CDO features the following additional libraries should be installed:

-

Unidata NetCDF library (https://www.unidata.ucar.edu/software/netcdf) version 4.3.3 or higher.

This library is needed to process NetCDF [NetCDF] files with CDO. -

ECMWF ecCodes library (https://software.ecmwf.int/wiki/display/ECC/ecCodes+Home) version 2.3.0 or higher. This library is needed to process GRIB2 files with CDO.

-

HDF5 szip library (https://www.hdfgroup.org/doc_resource/SZIP) version 2.1 or higher.

This library is needed to process szip compressed GRIB [GRIB] files with CDO. -

HDF5 library (https://www.hdfgroup.org) version 1.6 or higher.

This library is needed to import CM-SAF [CM-SAF] HDF5 files with the CDO operator import_cmsaf. -

PROJ library (https://proj.org) version 5.0 or higher.

This library is needed to convert Sinusoidal and Lambert Azimuthal Equal Area coordinates to geographic coordinates, for e.g. remapping. -

Magics library (https://software.ecmwf.int/wiki/display/MAGP/Magics) version 2.18 or higher.

This library is needed to create contour, vector and graph plots with CDO.

Compilation

Compilation is done by performing the following steps:

-

Unpack the archive, if you haven’t done that yet:

gunzip cdo-$VERSION.tar.gz # uncompress the archive tar xf cdo-$VERSION.tar # unpack it cd cdo-$VERSION -

Run the configure script:

./configure-

Optionaly with NetCDF [NetCDF] support:

./configure --with-netcdf=<NetCDF root directory> -

and with ecCodes:

./configure --with-eccodes=<ecCodes root directory>

For an overview of other configuration options use

./configure --help -

-

Compile the program by running make:

make

The program should compile without problems and the binary (cdo) should be available in the src directory of the distribution.

Installation

After the compilation of the source code do a make install, possibly as root if the destination permissions require that.

make install

The binary is installed into the directory <prefix>/bin. <prefix> defaults to /usr/local but can be changed with the --prefix option of the configure script.

Alternatively, you can also copy the binary from the src directory manually to some bin directory in your search path.

1.1.1.3. Conda

Conda is an open-source package manager and environment management system for various languages (Python, R, etc.). Conda is installed via Anaconda or Miniconda. Unlike Anaconda, miniconda is a lightweight conda distribution. They can be dowloaded from the main conda Website (https://conda.io/projects/conda/en/latest/user-guide/install/linux.html) or on the terminal

wget https://repo.anaconda.com/archive/Anaconda3-2021.11-Linux-x86_64.sh bash Anaconda3-2021.11-Linux-x86_64.sh source ~/.bashrc

and

wget https://repo.continuum.io/miniconda/Miniconda3-latest-Linux-x86_64.sh sh Miniconda3-latest-Linux-x86_64.sh

Upon setting your conda environment, you can install CDO using conda

conda install cdo conda install python-cdo

1.1.2 MacOS

Among the MacOS package managers, CDO can be installed from Homebrew and Macports. The installation via Homebrew is straight forward process on the terminal

brew install cdo

Similarly, Macports

port install cdo

In contrast to Homebrew, Macport allows you to enable GRIB2, szip compression and Magics++ graphic in CDO installation.

port install cdo +grib_api +magicspp +szip

In addition, you could also set CDO via Conda as Unix. You can follow this tutorial to install anaconda or miniconda in your computer (https://conda.io/projects/conda/en/latest/user-guide/install/macos.html). Then, you can install cdo by

conda install -c conda-forge cdo

1.1.3 Windows

Currently, CDO is not supported in Windows system and the binary is not available in the windows conda

repository. Therefore, CDO needs to be set in a virtual environment. Here, it covers the installation of CDO using

Windows Subsystem Linux (WSL) and virtual machines.

1.1.3.1. WSL

WSL emulates Unix in your Windows system. Then, you can install Unix libraries and software such as CDO or the linux conda distribution in your computer. Also, it allows you to directly share your files between your Windows and the WSL environment. However, more complex functions that require a graphic interface are not allowed.

In Windows 10 or newer, WSL can be readily set in your cmd by typing

wsl --install

This command will install, by default, Ubuntu 20.04 in WSL2. You could also choose a different system from this list.

wsl -l -o

Then, you can install your WSL environment as

wsl --install -d NAME

1.1.3.2. Virtual machine

Virtual machines can emulate different operative systems in your computer. Virtual machines are guest

computers mounted inside your host computer. You can set a Linux distribution in your Windows

device in this particular case. The advantages of Virtual machines to WSL are the graphical interface

and the fully operational Linux system. You can follow any tutorial on the internet such as this one

Finally, you can install CDO following any method listed in the section 1.1.1.

1.2 Usage

This section descibes how to use CDO. The syntax is:

cdo [ Options ] Operator1 [ -Operator2 [ -OperatorN ] ]

1.2.1 Options

All options have to be placed before the first operator. The following options are available for all operators:

| -a | Generate an absolute time axis. |

| -b <nbits> | Set the number of bits for the output precision. The valid precisions depend |

| on the file format: |

|

| For srv, ext and ieg format the letter L or B can be added to set the byteorder |

| to Little or Big endian. |

| --cmor | CMOR conform NetCDF output. |

| -C, --color | Colorized output messages. |

| --double | Using double precision floats for data in memory. |

| --eccodes | Use ecCodes to decode/encode GRIB1 messages. |

| --filter <filterspec> |

| NetCDF4 filter specification. |

| -f <format> | Set the output file format. The valid file formats are: |

|

| GRIB2 is only available if CDO was compiled with ecCodes support and all |

| NetCDF file types are only available if CDO was compiled with NetCDF support! |

| -g <grid> | Define the default grid description by name or from file (see chapter 1.3 on page 87). |

| Available grid names are: global_<DXY>, zonal_<DY>, r<NX>x<NY>, lon=<LON>/lat=<LAT>, |

| F<N>, gme<NI>, hpz<ZOOM> |

| -h, --help | Help information for the operators. |

| --no_history | Do not append to NetCDF history global attribute. |

| --netcdf_hdr_pad, --hdr_pad, --header_pad <nbr> |

| Pad NetCDF output header with nbr bytes. |

| -k <chunktype> | NetCDF4 chunk type: auto, grid or lines. |

| -L | Lock I/O (sequential access). |

| -m <missval> | Set the missing value of non NetCDF files (default: -9e+33). |

| -O | Overwrite existing output file, if checked. |

| Existing output file is checked only for: ens<STAT>, merge, mergetime |

| --operators | List of all operators. |

| -P <nthreads> | Set number of OpenMP threads (Only available if OpenMP support was compiled in). |

| --pedantic | Warnings count as errors. |

| --percentile <method> |

| Methods: nrank, nist, rtype8, <NumPy method (linear|lower|higher|nearest|...)> |

| --reduce_dim | Reduce NetCDF dimensions. |

| -R, --regular | Convert GRIB1 data from global reduced to regular Gaussian grid (only with cgribex lib). |

| -r | Generate a relative time axis. |

| -S | Create an extra output stream for the module TIMSTAT. This stream contains |

| the number of non missing values for each output period. |

| -s, --silent | Silent mode. |

| --shuffle | Specify shuffling of variable data bytes before compression (NetCDF). |

| --single | Using single precision floats for data in memory. |

| --sortname | Alphanumeric sorting of NetCDF parameter names. |

| -t <partab> | Set the GRIB1 (cgribex) default parameter table name or file (see chapter 1.6 on page 94). |

| Predefined tables are: echam4 echam5 echam6 mpiom1 ecmwf remo |

| --timestat_date <srcdate> |

| Target timestamp (temporal statistics): first, middle, midhigh or last source timestep. |

| -V, --version | Print the version number. |

| -v, --verbose | Print extra details for some operators. |

| -w | Disable warning messages. |

| --worker <num> | Number of worker to decode/decompress GRIB records. |

| -z aec | AEC compression of GRIB1 records. |

| jpeg | JPEG compression of GRIB2 records. |

| zip[_1-9] | Deflate compression of NetCDF4 variables. |

| zstd[_1-19] | Zstandard compression of NetCDF4 variables. |

1.2.2 Environment variables

There are some environment variables which influence the behavior of CDO. An incomplete list can be found in Appendix A.

Here is an example to set the environment variable CDO_RESET_HISTORY for different shells:

| Bourne shell (sh): | CDO_RESET_HISTORY=1 ; export CDO_RESET_HISTORY |

| Korn shell (ksh): | export CDO_RESET_HISTORY=1 |

| C shell (csh): | setenv CDO_RESET_HISTORY 1 |

1.2.3 Operators

There are more than 700 operators available. A detailed description of all operators can be found in the Reference Manual section.

1.2.4 Parallelized operators

Some of the CDO operators are shared memory parallelized with OpenMP. An OpenMP-enabled C compiler is needed to use this feature. Users may request a specific number of OpenMP threads nthreads with the ’ -P’ switch.

Here is an example to distribute the bilinear interpolation on 8 OpenMP threads:

cdo -P 8 remapbil,targetgrid infile outfile

Many CDO operators are I/O-bound. This means most of the time is spend in reading and writing the data. Only compute intensive CDO operators are parallelized. An incomplete list of OpenMP parallelized operators can be found in Appendix B.

1.2.5 Operator parameter

Some operators need one or more parameter. A list of parameter is indicated by the seperator ’,’.

-

STRING

String parameters require quotes if the string contains blanks or other characters interpreted by the shell. The following command select variables with the name pressure and tsurf:

cdo selvar,pressure,tsurf infile outfile -

FLOAT

Floating point number in any representation. The following command sets the range between 0 and 273.15 of all fields to missing value:

cdo setrtomiss,0,273.15 infile outfile -

BOOL

Boolean parameter in the following representation TRUE/FALSE, T/F or 0/1. To disable the weighting by grid cell area in the calculation of a field mean, use:

cdo fldmean,weights=FALSE infile outfile -

INTEGER

A range of integer parameter can be specified by first/last[/inc]. To select the days 5, 6, 7, 8 and 9 use:

cdo selday,5/9 infile outfileThe result is the same as:

cdo selday,5,6,7,8,9 infile outfile

1.2.6 Operator chaining

Operator chaining allows to combine two or more operators on the command line into a single CDO call. This allows the creation of complex operations out of more simple ones: reductions over several dimensions, file merges and all kinds of analysis processes. All operators with a fixed number of input streams and one output stream can pass the result directly to an other operator. For differentiation between files and operators all operators must be written with a prepended "-" when chaining.

cdo -monmean -add -mulc,2.0 infile1 -daymean infile2 outfile (CDO example call)

Here monmean will have the output of add while add takes the output of mulc,2.0 and daymean. infile1 and infile2 are inputs for their predecessor. When mixing operators with an arbitrary number of input streams extra care needs to be taken. The following examples illustrates why.

-

cdo info -timavg infile1 infile2

-

cdo info -timavg infile?

-

cdo timavg infile1 tmpfile

cdo info tmpfile infile2

rm tmpfile

All three examples produce identical results. The time average will be computed only on the first input

file.

Note(1): In section 1.3.2 we introduce argument groups which will make this a lot easier and less error prone.

Note(2): Operator chaining is implemented over POSIX Threads (pthreads). Therefore this CDO feature is not

available on operating systems without POSIX Threads support!

1.2.7 Chaining Benefits

Combining operators can have several benefits. The most obvious is a performance increase through reducing disk I/O:

cdo sub -dayavg infile2 -timavg infile1 outfile

instead of

cdo timavg infile1 tmp1 cdo dayavg infile2 tmp2 cdo sub tmp2 tmp1 outfile rm tmp1 tmp2

Especially with large input files the reading and writing of intermediate files can have a big influence on the

overall performance.

A second aspect is the execution of operators: Limited by the algorythms potentially all operators of a chain can

run in parallel.

1.3 Advanced Usage

In this section we will introduce advanced features of CDO. These include operator grouping which allows to write more complex CDO calls and the apply keyword which allows to shorten calls that need an operator to be executed on multiple files as well as wildcards which allow to search paths for file signatures. These features have several restrictions and follow rules that depend on the input/output properties. These required properties of operators can be investigated with the following commands which will output a list of operators that have selected properties:

cdo --attribs [arbitrary/filesOnly/onlyFirst/noOutput/obase]

-

arbitrary describes all operators where the number of inputs is not defined.

-

filesOnly are operators that can have other operators as input.

-

onlyFirst shows which operators can only be at the most left position of the polish notation argument chain.

-

noOutput are all operators that do not print to any file (e.g info)

-

obase Here obase describes an operator that does not use the output argument as file but e.g as a file name base (output base). This is almost exclusivly used for operators the split input files.

cdo -splithour baseName_ could result in: baseName_1 baseName_2 ... baseName_N

For checking a single or multiple operator directly the following usage of --attribs can be used:

cdo --attribs operatorName

1.3.1 Wildcards

Wildcards are a standard feature of command line interpreters (shells) on many operating systems. They are

placeholder characters used in file paths that are expanded by the interpreter into file lists. For further information

the Advance Bash Scripting Guide is a valuable source of information. Handling of input is a central issue for

CDO and in some circumstances it is not enough to use the wildcards from the shell. That’s why CDO can handle

them on its own.

| all files | 2020-2-01.txt 2020-2-11.txt 2020-2-15.txt 2020-3-01.txt 2020-3-02.txt |

| 2020-3-12.txt 2020-3-13.txt 2020-3-15.txt 2021.grb 2022.grb | |

| wildcard | filelist results |

| 2020-3* and 2020-3-??.txt | 2020-3-01.txt 2020-3-02.txt 2020-3-12.txt 2020-3-13.txt 2020-3-15.txt |

| 2020-3-?1.txt | 2020-3-01.txt |

| *.grb | 2021.grb 2020.grb |

Use single quotes if the input stream names matched to a single wildcard expression. In this case CDO will do the pattern matching and the output can be combined with other operators. Here is an example for this feature:

cdo timavg -select,name=temperature ’infile?’ outfile

In earlier versions of CDO this was necessary to have the right files parsed to the right operator. Newer

version support this with the argument grouping feature (see 1.3.2). We advice the use of the grouping

mechanism instead of the single quoted wildcards since this feature could be deprecated in future versions.

Note: Wildcard expansion is not available on operating systems without the glob() function!

1.3.2 Argument Groups

In section 1.2.6 we described that it is not possible to chain operators with an arbitrary number of inputs. In this

section we want to show how this can be achieved through the use of operator grouping with angled brackets [].

Using these brackets CDO can assigned the inputs to their corresponding operators during the execution of the

command line. The ability to write operator combination in a parenthis-free way is partly given up in favor of

allowing operators with arbitrary number of inputs. This allows a much more compact way to handle large number

of input files.

The following example shows an example which we will transform from a non-working solution to a working

one.

cdo -infon -div -fldmean -cat infileA -mulc,2.0 infileB -fldmax infileC

This example will throw the following error:

cdo (Abort): -infon -div -fldmean -cat infileA -mulc,2.0 infileB -fldmax infileC ^ Operator cannot be assigned. Reason: Multiple variable input operators used. Use subgroups via [ ] to clarify relations (help: --argument_groups).

The error is raised by the operator div. This operator needs two input streams and one output stream, but the cat operator has claimed all possible streams on its right hand side as input because it accepts an arbitrary number of inputs. Hence it didn’t leave anything for the remaining input or output streams of div. For this we can declare a group which will be passed to the operator left of the group.

cdo -infon -div -fldmean -cat [ infileA -mulc,2.0 infileB ] -fldmax infileC

For full flexibility it is possible to have groups inside groups:

cdo -infon -div -fldmean -cat [ infileA infileB -merge [ infileC1 infileC2 ] ] -fldmax infileD

1.3.3 Applying a operator or chain to multiple inputs

When working with medium or large number of similar files there is a common problem of a processing step (often a reduction) which needs to be performed on all of them before a more specific analysis can be applied. Usually this can be done in two ways: One option is to use a merge operator to glue everything together and chain the reduction step after it. The second option is to write a for-loop over all inputs which perform the basic processing on each of the files separately and call a merge operator one the results. Unfortunately both options have side-effects: The first one needs a lot of memory because all files are read in completely and reduced afterwards while the latter one creates a lot of temporary files. Both memory and disk IO can be bottlenecks and should be avoided. In CDO there exist two approaches to circumvent most drawbacks. The first is to use the more recent ’apply’ feature using the [ to_be_applied : applied_to ] syntax, the second is an older approach and is only listed and documented for completeness sake. We highly recommend the more recent approach!

1.3.4 Apply with [:] notation

With the [ to_be_applied : applied_to ] syntax it is possible to prepend multiple inputs or chains with another chain. For example:

-mergetime [ -selname,tsurf : *.grb ]

would merge all grib files in the folder after selecting the variable tsurf from them.

In general the to_be_applied is applied in parallel to all related input streams (applied_to) before all streams are

passed to operator next in the chain.

The following is an example with three input files:

cdo -mergetime [ -selname,tsurf : infile1 infile2 infile3 ] outfile

This would result in CDO executing as if the following was used:

cdo -mergetime -selname,tsurf infile1 -selname,tsurf infile2 -selname,tsurf infile3 outfile

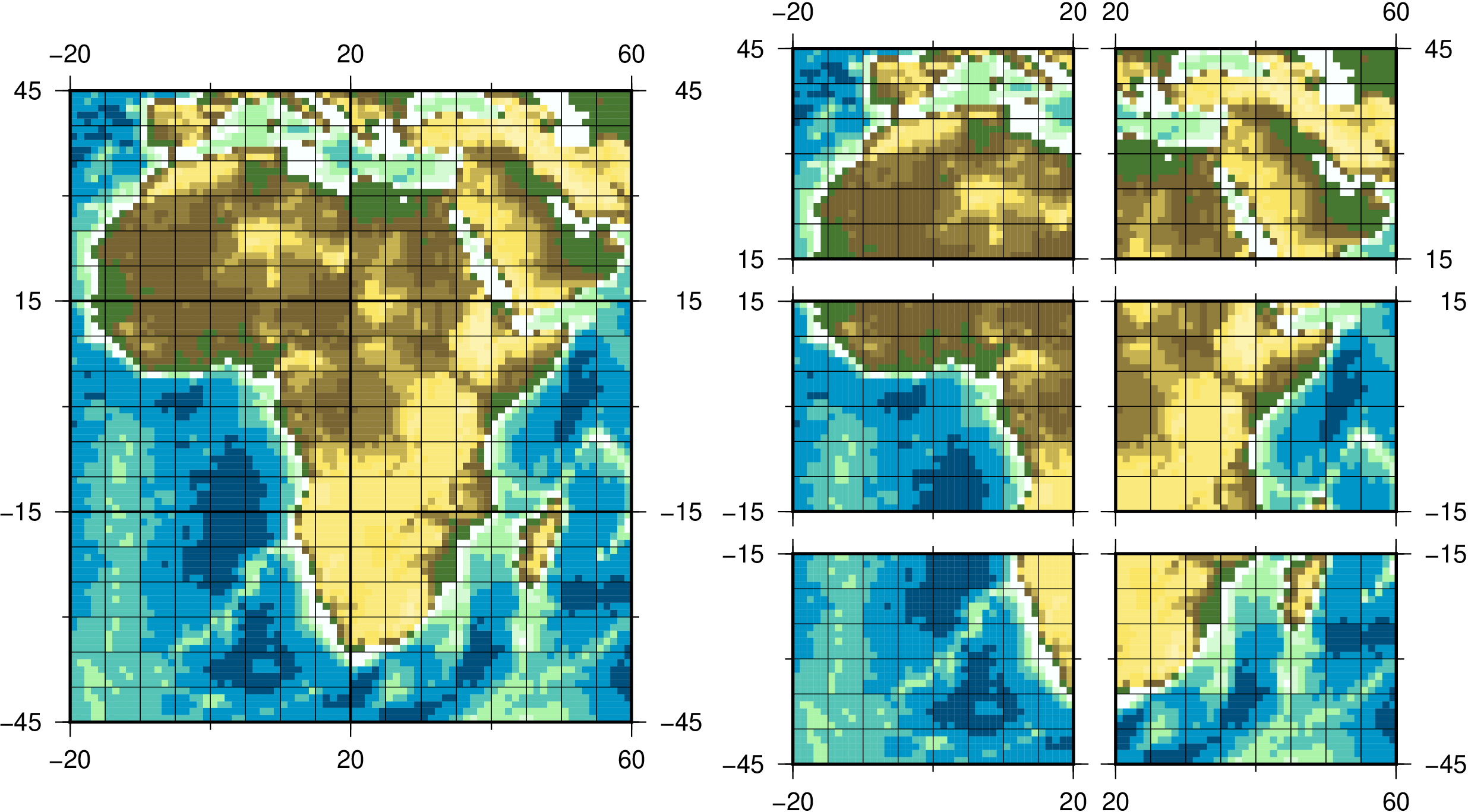

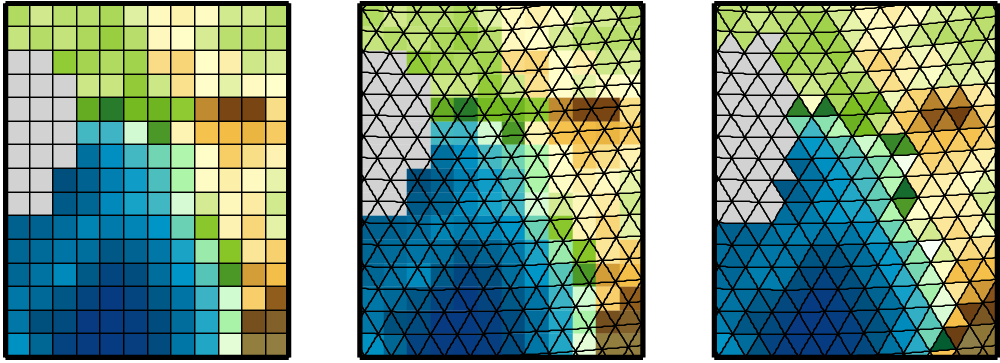

| Figure 1.1.: | Usage and result of [:] notation |

This notation is especially useful when combined with wildcards. The previous example can be shortened further.

cdo -mergetime [ -selname,tsurf : infile? ] outfile

As shown this feature allows to simplify commands with medium amount of files and to move reductions further back. This can also have a positive impact on the performance.

An example where performance can take a hit.

cdo -yearmean -selname,tsurf -mergetime [ f1 ... f40 ]

An improved but ugly to write example.

cdo -yearmean -mergetime [ -selname,tsurf f1 -selname,tsurf f2 ... -selname,tsurf f40 ]

Apply saves the day. And creates the call above with much less typing.

cdo -yearmean -mergetime [ -selname,tsurf : f1 ... f40 ]

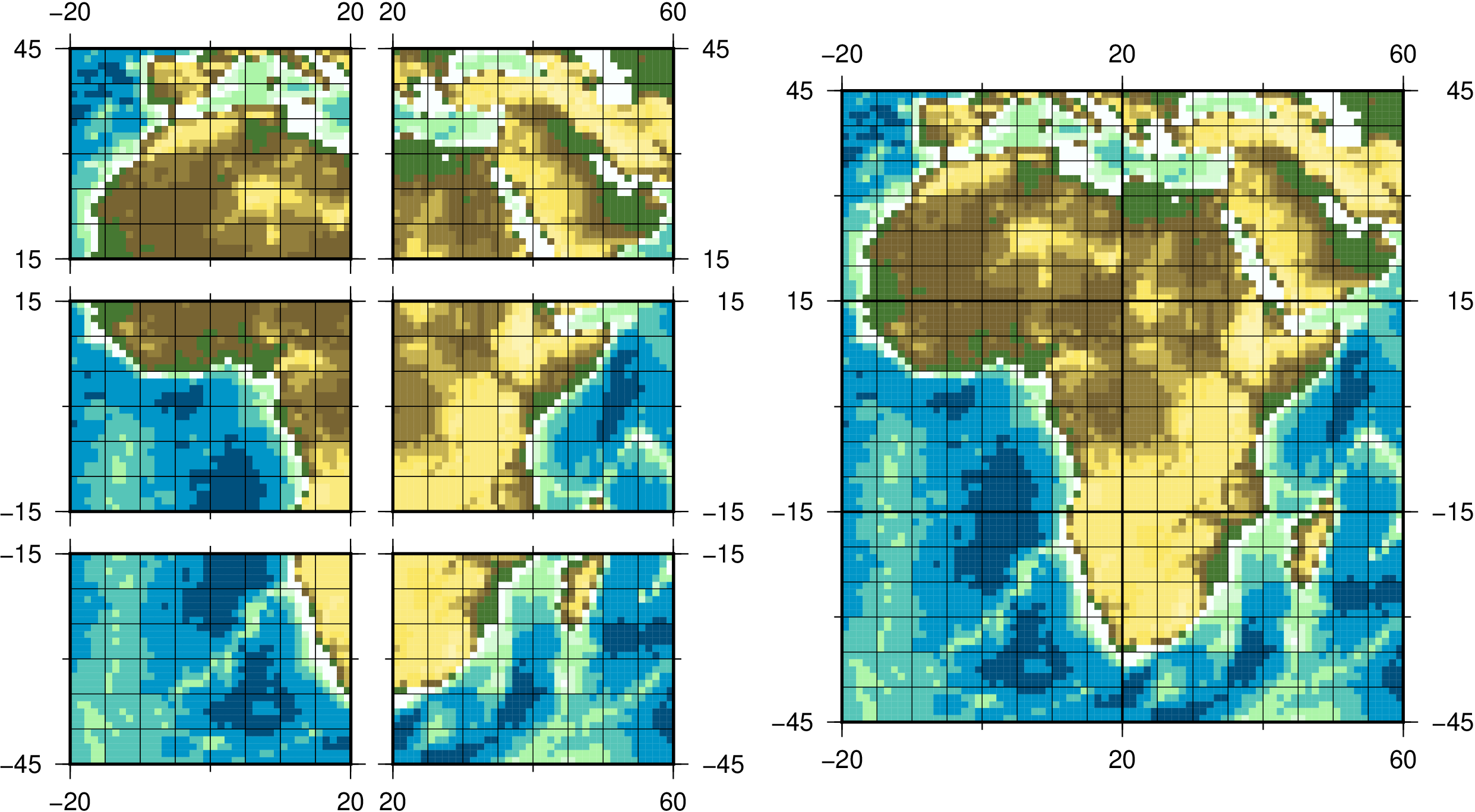

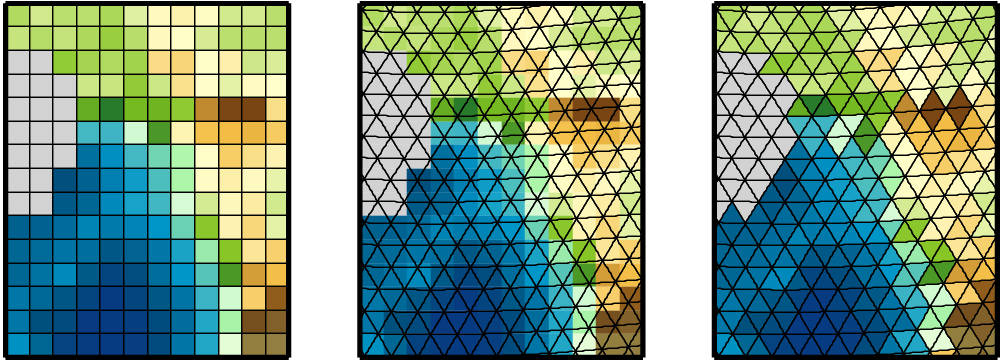

| Figure 1.2.: | [ : ] notation simplifies command and execution |

Further this notation allows to prepend full cdo chains with other operators:

cdo -info -mergetime [ -selname,tsurf : -addc,1 -mul f1 f2 -addc,4 f3 ]

Resolving internally to the following command:

info -mergetime -selname,tsurf [ -addc,1 -mul f1 f2 -selvar,tsurf -addc,4 f3 ]

1.3.5 Apply Keyword (LEGACY)

Originally the apply keyword was introduced for that purpose. We want to repeat that this is an older method and

should not be used in newly written CDO commands! It can be used as an operator, but it needs at least one

operator as a parameter, which is applied in parallel to all related input streams in a parallel way before all

streams are passed to operator next in the chain.

The following is an example with three input files:

cdo -mergetime -apply,-selname,tsurf [ infile1 infile2 infile3 ] outfile

would result in:

cdo -mergetime -selname,tsurf infile1 -selname,tsurf infile2 -selname,tsurf infile3 outfile

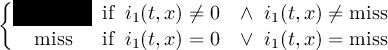

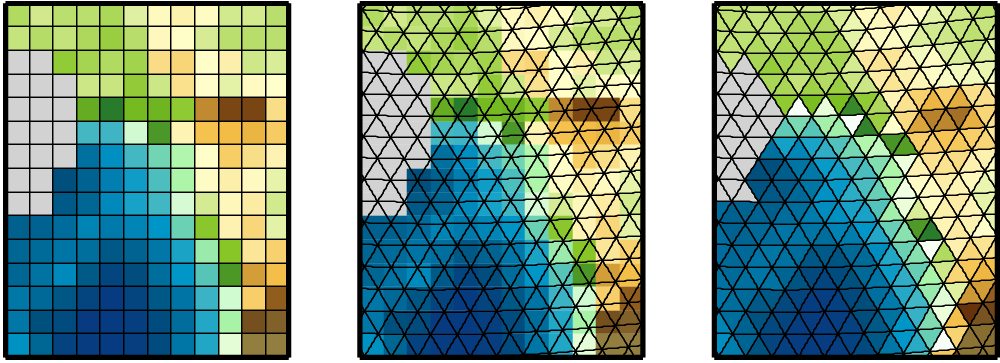

| Figure 1.3.: | Usage and result of apply keyword |

Apply is especially useful when combined with wildcards. The previous example can be shortened further.

cdo -mergetime -apply,-selname,tsurf [ infile? ] outfile

As shown this feature allows to simplify commands with medium amount of files and to move reductions further back. This can also have a positive impact on the performance.

An example where performance can take a hit.

cdo -yearmean -selname,tsurf -mergetime [ f1 ... f40 ]

An improved but ugly to write example.

cdo -yearmean -mergetime [ -selname,tsurf f1 -selname,tsurf f2 ... -selname,tsurf f40 ]

Apply saves the day. And creates the call above with much less typing.

cdo -yearmean -mergetime [ -apply,-selname,tsurf [ f1 ... f40 ] ]

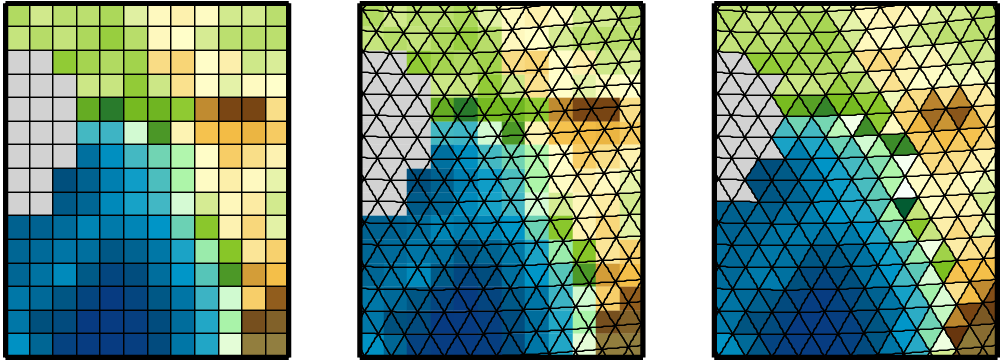

| Figure 1.4.: | Apply keyword simplifies command and execution |

In the example in figure 1.4 the resulting call will dramatically save process interaction as well as execution times since the reduction (selname,tsurf) is applied on the files first. That means that the mergetime operator will receive the reduced files and the operations for merging the whole data is saved. For other CDO calls further improvements can be made by adding more arguments to apply (1.5)

A less performant example.

cdo -aReduction -anotherReduction -selname,tsurf -mergetime [ f1 ... f40 ]

cdo -mergetime -apply,"-aReduction -anotherReduction -selname,tsurf" [ f1 ... f40 ]

| Figure 1.5.: | Multi argument apply |

Restrictions: While the apply keyword can be extremely helpful it has several restrictions (for now!).

-

Apply inputs can only be files, wildcards and operators that have 0 inputs and 1 output.

-

Apply can not be used as the first CDO operator.

-

Apply arguments can only be operators with 1 input and 1 output.

-

Grouping inside the Apply argument or input is not allowed.

1.4 Memory Requirements

This section roughly describes the memory requirements of CDO. CDO tries to use as little memory as possible. The smallest unit that is read by all operators is a horizontal field. The required memory depends mainly on the used operators, the data format, the data type and the size of the fields.

The operators have partly very different memory requirements. Many CDO modules like FLDSTAT process one horizontal field at a time. Memory-intensive modules such as ENSSTAT and TIMSTAT require all fields of a time step to be held in memory. Of course, the memory requirements of each operator add up when they are combined. Some operators are parallelized with OpenMP. In multi-threaded mode (see option -P) the memory requirement can increase for these operators. This increase grows with the number of threads used.

The data type determines the number of bytes per value. Single precision floating point data occupies 4 bytes per value. All other data types are read as double precision floats and thus occupy 8 bytes per value. With the CDO option --single all data is read as single precision floats. This can reduce the memory requirement by a factor of 2.

1.5 Horizontal grids

Physical quantities of climate models are typically stored on a horizonal grid. CDO supports structured grids like regular lon/lat or curvilinear grids and also unstructured grids.

1.5.1 Grid area weights

One single point of a horizontal grid represents the mean of a grid cell. These grid cells are typically of different sizes, because the grid points are of varying distance.

Area weights are individual weights for each grid cell. They are needed to compute the area weighted mean or variance of a set of grid cells (e.g. fldmean - the mean value of all grid cells). In CDO the area weights are derived from the grid cell area. If the cell area is not available then it will be computed from the geographical coordinates via spherical triangles. This is only possible if the geographical coordinates of the grid cell corners are available or derivable. Otherwise CDO gives a warning message and uses constant area weights for all grid cells.

The cell area is read automatically from a NetCDF input file if a variable has the corresponding “cell_measures” attribute, e.g.:

var:cell_measures = "area: cell_area" ;

If the computed cell area is not desired then the CDO operator setgridarea can be used to set or overwrite the grid cell area.

1.5.2 Grid description

In the following situations it is necessary to give a description of a horizontal grid:

-

Changing the grid description (operator: setgrid)

-

Horizontal interpolation (all remapping operators)

-

Generating of variables (operator: const, random)

As now described, there are several possibilities to define a horizontal grid.

1.5.2.1. Predefined grids

Predefined grids are available for global regular, gaussian, HEALPix or icosahedral-hexagonal GME grids.

Global regular grid: global_<DXY>

global_<DXY> defines a global regular lon/lat grid. The grid increment <DXY> can be chosen arbitrarily. The longitudes start at <DXY>/2 - 180∘ and the latitudes start at <DXY>/2 - 90∘.

Regional regular grid: dcw:<CountryCode>[_<DXY>]

dcw:<CountryCode>[_<DXY>] defines a regional regular lon/lat grid from the country code. The default value of the optional grid increment <DXY> is 0.1 degree. The ISO two-letter country codes can be found on https://en.wikipedia.org/wiki/ISO_3166-1_alpha-2. To define a state, append the state code to the country code, e.g. USAK for Alaska. For the coordinates of a country CDO uses the DCW (Digital Chart of the World) dataset from GMT. This dataset must be installed on the system and the environment variable DIR_DCW must point to it.

Zonal latitudes: zonal_<DY>

zonal_<DY> defines a grid with zonal latitudes only. The latitude increment <DY> can be chosen arbitrarily. The latitudes start at <DY>/2 - 90∘. The boundaries of each latitude are also generated. The number of longitudes is 1. A grid description of this type is needed to calculate the zonal mean (zonmean) for data on an unstructured grid.

Global regular grid: r<NX>x<NY>

r<NX>x<NY> defines a global regular lon/lat grid. The number of the longitudes <NX> and the latitudes <NY> can be chosen arbitrarily. The longitudes start at 0∘ with an increment of (360/<NX>)∘. The latitudes go from south to north with an increment of (180/<NY>)∘.

One grid point: lon=<LON>/lat=<LAT>

lon=<LON>/lat=<LAT> defines a lon/lat grid with only one grid point.

Full regular Gaussian grid: F<N>

F<N> defines a global regular Gaussian grid. N specifies the number of latitudes lines between the Pole and the Equator. The total number of latitudes and longitues is: nlat = N * 2; nlon = nlat * 2. The longitudes start at 0∘ with an increment of (360/nlon)∘. The gaussian latitudes go from north to south.

Global icosahedral-hexagonal GME grid: gme<NI>

gme<NI> defines a global icosahedral-hexagonal GME grid. NI specifies the number of intervals on a main triangle side.

HEALPix grid: hp<NSIDE>[_<ORDER>]

HEALPix is an acronym for Hierarchical Equal Area isoLatitude Pixelization of a sphere.

hp<NSIDE>[_<ORDER>] defines the parameter of a global HEALPix grid. The NSIDE parameter controls the

resolution of the pixellization. It is the number of pixels on the side of each of the 12 top-level HEALPix pixels.

The total number of grid pixels is 12*NSIDE*NSIDE. NSIDE=1 generates the 12 (H=4, K=3) equal sized top-level

HEALPix pixels. ORDER sets the index ordering convention of the pixels, available are nested (default) or ring

ordering. A shortcut for hp<NSIDE>_nested is hpz<ZOOM>. ZOOM is the zoom level and the relation to NSIDE is

zoom = log2(nside).

If the geographical coordinates are required in CDO, they are calculated from the HEALPix parameters. For this calculation the astropy-healpix C library is used.

1.5.2.2. Grids from data files

You can use the grid description from an other datafile. The format of the datafile and the grid of the data field must be supported by CDO. Use the operator ’sinfo’ to get short informations about your variables and the grids. If there are more then one grid in the datafile the grid description of the first variable will be used. Add the extension :N to the name of the datafile to select grid number N.

1.5.2.3. SCRIP grids

SCRIP (Spherical Coordinate Remapping and Interpolation Package) uses a common grid description for curvilinear and unstructured grids. For more information about the convention see [SCRIP]. This grid description is stored in NetCDF. Therefor it is only available if CDO was compiled with NetCDF support!

SCRIP grid description example of a curvilinear MPIOM [MPIOM] GROB3 grid (only the NetCDF header):

netcdf grob3s { dimensions: grid_size = 12120 ; grid_corners = 4 ; grid_rank = 2 ; variables: int grid_dims(grid_rank) ; double grid_center_lat(grid_size) ; grid_center_lat:units = "degrees" ; grid_center_lat:bounds = "grid_corner_lat" ; double grid_center_lon(grid_size) ; grid_center_lon:units = "degrees" ; grid_center_lon:bounds = "grid_corner_lon" ; int grid_imask(grid_size) ; grid_imask:units = "unitless" ; grid_imask:coordinates = "grid_center_lon grid_center_lat" ; double grid_corner_lat(grid_size, grid_corners) ; grid_corner_lat:units = "degrees" ; double grid_corner_lon(grid_size, grid_corners) ; grid_corner_lon:units = "degrees" ; // global attributes: :title = "grob3s" ; }

1.5.2.4. CDO grids

All supported grids can also be described with the CDO grid description. The following keywords can be used to describe a grid:

| Keyword | Datatype | Description |

| gridtype | STRING | Type of the grid (gaussian, lonlat, curvilinear, unstructured). |

| gridsize | INTEGER | Size of the grid. |

| xsize | INTEGER | Size in x direction (number of longitudes). |

| ysize | INTEGER | Size in y direction (number of latitudes). |

| xvals | FLOAT ARRAY | X values of the grid cell center. |

| yvals | FLOAT ARRAY | Y values of the grid cell center. |

| nvertex | INTEGER | Number of the vertices for all grid cells. |

| xbounds | FLOAT ARRAY | X bounds of each gridbox. |

| ybounds | FLOAT ARRAY | Y bounds of each gridbox. |

| xfirst, xinc | FLOAT, FLOAT | Macros to define xvals with a constant increment, |

| xfirst is the x value of the first grid cell center. | ||

| yfirst, yinc | FLOAT, FLOAT | Macros to define yvals with a constant increment, |

| yfirst is the y value of the first grid cell center. | ||

| xunits | STRING | units of the x axis |

| yunits | STRING | units of the y axis |

Which keywords are necessary depends on the gridtype. The following table gives an overview of the default values or the size with respect to the different grid types.

| gridtype | lonlat | gaussian | projection | curvilinear | unstructured |

| gridsize | xsize*ysize | xsize*ysize | xsize*ysize | xsize*ysize | ncell |

| xsize | nlon | nlon | nx | nlon | gridsize |

| ysize | nlat | nlat | ny | nlat | gridsize |

| xvals | xsize | xsize | xsize | gridsize | gridsize |

| yvals | ysize | ysize | ysize | gridsize | gridsize |

| nvertex | 2 | 2 | 2 | 4 | nv |

| xbounds | 2*xsize | 2*xsize | 2*xsize | 4*gridsize | nv*gridsize |

| ybounds | 2*ysize | 2*ysize | 2*xsize | 4*gridsize | nv*gridsize |

| xunits | degrees | degrees | m | degrees | degrees |

| yunits | degrees | degrees | m | degrees | degrees |

The keywords nvertex, xbounds and ybounds are optional if area weights are not needed. The grid cell corners xbounds and ybounds have to rotate counterclockwise.

CDO grid description example of a T21 gaussian grid:

gridtype = gaussian xsize = 64 ysize = 32 xfirst = 0 xinc = 5.625 yvals = 85.76 80.27 74.75 69.21 63.68 58.14 52.61 47.07 41.53 36.00 30.46 24.92 19.38 13.84 8.31 2.77 -2.77 -8.31 -13.84 -19.38 -24.92 -30.46 -36.00 -41.53 -47.07 -52.61 -58.14 -63.68 -69.21 -74.75 -80.27 -85.76

CDO grid description example of a global regular grid with 60x30 points:

gridtype = lonlat xsize = 60 ysize = 30 xfirst = -177 xinc = 6 yfirst = -87 yinc = 6

The description for a projection is somewhat more complicated. Use the first section to describe the coordinates of the projection with the above keywords. Add the keyword grid_mapping_name to descibe the mapping between the given coordinates and the true latitude and longitude coordinates. grid_mapping_name takes a string value that contains the name of the projection. A list of attributes can be added to define the mapping. The name of the attributes depend on the projection. The valid names of the projection and there attributes follow the NetCDF CF-Convention.

CDO supports the special grid mapping attribute proj_params. These parameter will be passed directly to the PROJ library to generate the geographic coordinates if needed.

The geographic coordinates of the following projections can be generated without the attribute proj_params, if all other attributes are available:

-

rotated_latitude_longitude

-

lambert_conformal_conic

-

lambert_azimuthal_equal_area

-

sinusoidal

-

polar_stereographic

It is recommend to set the attribute proj_params also for the above projections to make sure all PROJ parameter are set correctly.

Here is an example of a CDO grid description using the attribute proj_params to define the PROJ parameter of a polar stereographic projection:

gridtype = projection xsize = 11 ysize = 11 xunits = "meter" yunits = "meter" xfirst = -638000 xinc = 150 yfirst = -3349350 yinc = 150 grid_mapping = crs grid_mapping_name = polar_stereographic proj_params = "+proj=stere +lon_0=-45 +lat_ts=70 +lat_0=90 +x_0=0 +y_0=0"

The result is the same as using the CF conform Grid Mapping Attributes:

gridtype = projection xsize = 11 ysize = 11 xunits = "meter" yunits = "meter" xfirst = -638000 xinc = 150 yfirst = -3349350 yinc = 150 grid_mapping = crs grid_mapping_name = polar_stereographic straight_vertical_longitude_from_pole = -45. standard_parallel = 70. latitude_of_projection_origin = 90. false_easting = 0. false_northing = 0.

CDO grid description example of a regional rotated lon/lat grid:

gridtype = projection xsize = 81 ysize = 91 xunits = "degrees" yunits = "degrees" xfirst = -19.5 xinc = 0.5 yfirst = -25.0 yinc = 0.5 grid_mapping_name = rotated_latitude_longitude grid_north_pole_longitude = -170 grid_north_pole_latitude = 32.5

Example CDO descriptions of a curvilinear and an unstructured grid can be found in Appendix D.

1.5.3 ICON - Grid File Server

The geographic coordinates of the ICON model are located on an unstructured grid. This grid is stored in a separate grid file independent of the model data. The grid files are made available to the general public via a file server. Furthermore, these grid files are located at DKRZ under /pool/data/ICON/grids.

With the CDO function setgrid,<gridfile> this grid information can be added to the data if needed. Here is an example:

cdo sellonlatbox,-20,60,10,70 -setgrid,<path_to_gridfile> icondatafile result

ICON model data in NetCDF format contains the global attribute grid_file_uri. This attribute contains a link to the appropriate grid file on the ICON grid file server. If the global attribute grid_file_uri is present and valid, the grid information can be added automatically. The setgrid function is then no longer required. The environment variable CDO_DOWNLOAD_PATH can be used to select a directory for storing the grid file. If this environment variable is set, the grid file will be automatically downloaded from the grid file server to this directory if needed. If the grid file already exists in the current directory, the environment variable does not need to be set.

If the grid files are available locally, like at DKRZ, they do not need to be fetched from the grid file server. Use the environment variable CDO_ICON_GRIDS to set the root directory of the ICON grids. Here is an example for the ICON grids at DKRZ:

CDO_ICON_GRIDS=/pool/data/ICON

1.6 Z-axis description

Sometimes it is necessary to change the description of a z-axis. This can be done with the operator setzaxis. This operator needs an ASCII formatted file with the description of the z-axis. The following keywords can be used to describe a z-axis:

| Keyword | Datatype | Description |

| zaxistype | STRING | type of the z-axis |

| size | INTEGER | number of levels |

| levels | FLOAT ARRAY | values of the levels |

| lbounds | FLOAT ARRAY | lower level bounds |

| ubounds | FLOAT ARRAY | upper level bounds |

| vctsize | INTEGER | number of vertical coordinate parameters |

| vct | FLOAT ARRAY | vertical coordinate table |

The keywords lbounds and ubounds are optional. vctsize and vct are only necessary to define hybrid model levels.

Available z-axis types:

| Z-axis type | Description | Units |

| surface | Surface | |

| pressure | Pressure level | pascal |

| hybrid | Hybrid model level | |

| height | Height above ground | meter |

| depth_below_sea | Depth below sea level | meter |

| depth_below_land | Depth below land surface | centimeter |

| isentropic | Isentropic (theta) level | kelvin |

Z-axis description example for pressure levels 100, 200, 500, 850 and 1000 hPa:

zaxistype = pressure size = 5 levels = 10000 20000 50000 85000 100000

Z-axis description example for ECHAM5 L19 hybrid model levels:

zaxistype = hybrid size = 19 levels = 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 vctsize = 40 vct = 0 2000 4000 6046.10938 8267.92578 10609.5117 12851.1016 14698.5 15861.125 16116.2383 15356.9258 13621.4609 11101.5625 8127.14453 5125.14062 2549.96875 783.195068 0 0 0 0 0 0 0.000338993268 0.00335718691 0.0130700432 0.0340771675 0.0706498027 0.12591666 0.201195419 0.295519829 0.405408859 0.524931908 0.646107674 0.759697914 0.856437683 0.928747177 0.972985268 0.992281914 1

Note that the vctsize is twice the number of levels plus two and the vertical coordinate table must be specified for the level interfaces.

1.7 Time axis

A time axis describes the time for every timestep. Two time axis types are available: absolute time and relative time axis. CDO tries to maintain the actual type of the time axis for all operators.

1.7.1 Absolute time

An absolute time axis has the current time to each time step. It can be used without knowledge of the calendar. This is preferably used by climate models. In NetCDF files the absolute time axis is represented by the unit of the time: "day as %Y%m%d.%f".

1.7.2 Relative time

A relative time is the time relative to a fixed reference time. The current time results from the reference time and the elapsed interval. The result depends on the calendar used. CDO supports the standard Gregorian, proleptic Gregorian, 360 days, 365 days and 366 days calendars. The relative time axis is preferably used by numerical weather prediction models. In NetCDF files the relative time axis is represented by the unit of the time: "time-units since reference-time", e.g "days since 1989-6-15 12:00".

1.7.3 Conversion of the time

Some programs which work with NetCDF data can only process relative time axes. Therefore it may be necessary to convert from an absolute into a relative time axis. This conversion can be done for each operator with the CDO option ’-r’. To convert a relative into an absolute time axis use the CDO option ’-a’.

1.8 Parameter table

A parameter table is an ASCII formated file to convert code numbers to variable names. Each variable has one line with its code number, name and a description with optional units in a blank separated list. It can only be used for GRIB, SERVICE, EXTRA and IEG formated files. The CDO option ’-t <partab>’ sets the default parameter table for all input files. Use the operator ’setpartab’ to set the parameter table for a specific file.

Example of a CDO parameter table:

134 aps surface pressure [Pa] 141 sn snow depth [m] 147 ahfl latent heat flux [W/m**2] 172 slm land sea mask 175 albedo surface albedo 211 siced ice depth [m]

1.9 Missing values

Missing values are data points that are missing or invalid. Such data points are treated in a different way than valid data. Most CDO operators can handle missing values in a smart way. But if the missing value is within the range of valid data, it can lead to incorrect results. This applies to all arithmetic operations, but especially to logical operations when the missing value is 0 or 1.

The default missing value for GRIB, SERVICE, EXTRA and IEG files is -9.e33. The CDO option ’-m <missval>’ overwrites the default missing value. In NetCDF files the variable attribute ’_FillValue’ is used as a missing value. The operator ’setmissval’ can be used to set a new missing value.

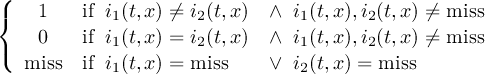

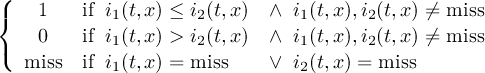

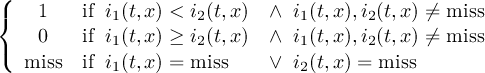

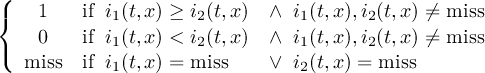

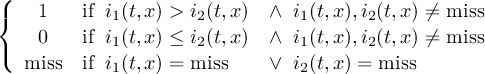

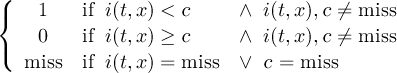

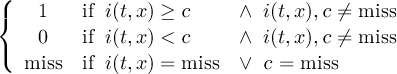

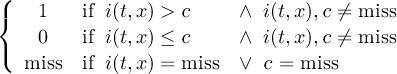

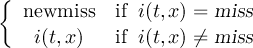

The CDO use of the missing value is shown in the following tables, where one table is printed for each operation. The operations are applied to arbitrary numbers a, b, the special case 0, and the missing value miss. For example the table named "addition" shows that the sum of an arbitrary number a and the missing value is the missing value, and the table named "multiplication" shows that 0 multiplied by missing value results in 0.

| addition | b | miss | |

| a | a + b | miss | |

| miss | miss | miss | |

| subtraction | b | miss | |

| a | a - b | miss | |

| miss | miss | miss | |

| multiplication | b | 0 | miss |

| a | a * b | 0 | miss |

| 0 | 0 | 0 | 0 |

| miss | miss | 0 | miss |

| division | b | 0 | miss |

| a | a∕b | miss | miss |

| 0 | 0 | miss | miss |

| miss | miss | miss | miss |

| maximum | b | miss | |

| a | max(a,b) | a | |

| miss | b | miss | |

| minimum | b | miss | |

| a | min(a,b) | a | |

| miss | b | miss | |

| sum | b | miss | |

| a | a + b | a | |

| miss | b | miss | |

The handling of missing values by the operations "minimum" and "maximum" may be surprising, but the definition given here is more consistent with that expected in practice. Mathematical functions (e.g. log, sqrt, etc.) return the missing value if an argument is the missing value or an argument is out of range.

All statistical functions ignore missing values, treading them as not belonging to the sample, with the side-effect of a reduced sample size.

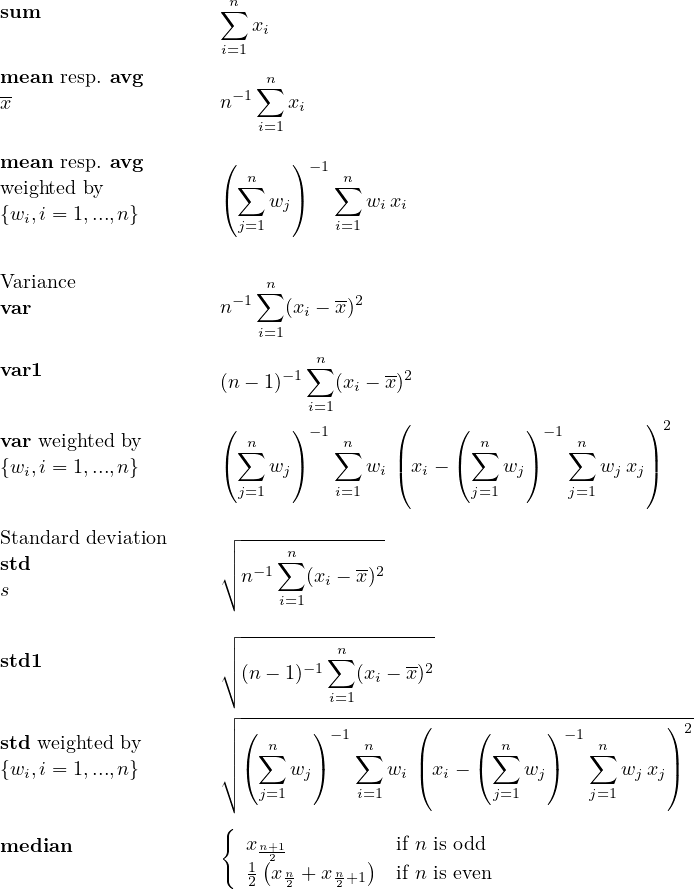

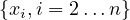

1.9.1 Mean and average

An artificial distinction is made between the notions mean and average. The mean is regarded as a statistical function, whereas the average is found simply by adding the sample members and dividing the result by the sample size. For example, the mean of 1, 2, miss and 3 is (1 + 2 + 3)∕3 = 2, whereas the average is (1 + 2 + miss + 3)∕4 = miss∕4 = miss. If there are no missing values in the sample, the average and mean are identical.

1.10 Percentile

There is no standard definition of percentile. All definitions yield to similar results when the number of values is very large. The following percentile methods are available in CDO:

| Percentile | |

| method |

Description |

| nrank | Nearest Rank method [default in CDO] |

| nist | The primary method recommended by NIST |

| rtype8 | R’s type=8 method |

| inverted_cdf | NumPy with percentile method=’inverted_cdf’ (R type=1) |

| averaged_inverted_cdf | NumPy with percentile method=’averaged_inverted_cdf’ (R type=2) |

| closest_observation | NumPy with percentile method=’closest_observation’ (R type=3) |

| interpolated_inverted_cdf | NumPy with percentile method=’interpolated_inverted_cdf’ (R type=4) |

| hazen | NumPy with percentile method=’hazen’ (R type=5) |

| weibull | NumPy with percentile method=’weibull’ (R type=6) |

| linear | NumPy with percentile method=’linear’ (R type=7) [default in NumPy and R] |

| median_unbiased | NumPy with percentile method=’median_unbiased’ (R type=8) |

| normal_unbiased | NumPy with percentile method=’normal_unbiased’ (R type=9) |

| lower | NumPy with percentile method=’lower’ |

| higher | NumPy with percentile method=’higher’ |

| midpoint | NumPy with percentile method=’midpoint’ |

| nearest | NumPy with percentile method=’nearest’ |

The percentile method can be selected with the CDO option --percentile. The Nearest Rank method is the default percentile method in CDO.

The different percentile methods can lead to different results, especially for small number of data values. Consider the ordered list {15, 20, 35, 40, 50, 55}, which contains six data values. Here is the result for the 30th, 40th, 50th, 75th and 100th percentiles of this list using the different percentile methods:

| Percentile | NumPy | NumPy | NumPy | NumPy | |||

| P | nrank | nist | rtype8 | linear | lower | higher | nearest |

| 30th | 20 | 21.5 | 23.5 | 27.5 | 20 | 35 | 35 |

| 40th | 35 | 32 | 33 | 35 | 35 | 35 | 35 |

| 50th | 35 | 37.5 | 37.5 | 37.5 | 35 | 40 | 40 |

| 75th | 50 | 51.25 | 50.42 | 47.5 | 40 | 50 | 50 |

| 100th | 55 | 55 | 55 | 55 | 55 | 55 | 55 |

1.10.1 Percentile over timesteps

The amount of data for time series can be very large. All data values need to held in memory to calculate the percentile. The percentile over timesteps uses a histogram algorithm, to limit the amount of required memory. The default number of histogram bins is 101. That means the histogram algorithm is used, when the dataset has more than 101 time steps. The default can be overridden by setting the environment variable CDO_PCTL_NBINS to a different value. The histogram algorithm is implemented only for the Nearest Rank method.

1.11 Regions

The CDO operators maskregion and selregion can be used to mask and select regions. For this purpose, the region needs to be defined by the user. In CDO there are two possibilities to define regions.

One possibility is to define the regions with an ASCII file. Each region is defined by a polygon. Each line of the polygon contains the longitude and latitude coordinates of a point. A description file for regions can contain several polygons, these must be separated by a line with the character &.

Here is a simple example of a polygon for a box with longitudes from 120W to 90E and latitudes from 20N to 20S:

120 20 120 -20 270 -20 270 20

With the second option, predefined regions can be used via country codes. A country is specified with dcw:<CountryCode>. Country codes can be combined with the plus sign.

The ISO two-letter country codes can be found on https://en.wikipedia.org/wiki/ISO_3166-1_alpha-2. To define a state, append the state code to the country code, e.g. USAK for Alaska. For the coordinates of a country CDO uses the DCW (Digital Chart of the World) dataset from GMT. This dataset must be installed on the system and the environment variable DIR_DCW must point to it.

2 Reference manual

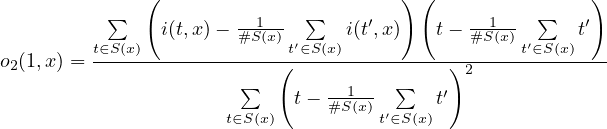

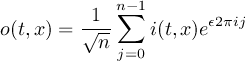

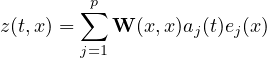

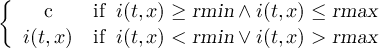

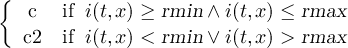

This section gives a description of all operators. Related operators are grouped to modules. For easier description all single input files are named infile or infile1, infile2, etc., and an arbitrary number of input files are named infiles. All output files are named outfile or outfile1, outfile2, etc. Further the following notion is introduced:

-

-

Timestep

of infile

of infile

-

-

Element number

of the field at timestep

of the field at timestep  of infile

of infile

-

-

Timestep

of outfile

of outfile

-

-

Element number

of the field at timestep

of the field at timestep  of outfile

of outfile

2.1 Information

This section contains modules to print information about datasets. All operators print there results to standard output.

Here is a short overview of all operators in this section:

| info | Dataset information listed by parameter identifier |

| infon | Dataset information listed by parameter name |

| cinfo | Compact information listed by parameter name |

| map | Dataset information and simple map |

| sinfo | Short information listed by parameter identifier |

| sinfon | Short information listed by parameter name |

| xsinfo | Extra short information listed by parameter name |

| xsinfop | Extra short information listed by parameter identifier |

| diff | Compare two datasets listed by parameter id |

| diffn | Compare two datasets listed by parameter name |

| npar | Number of parameters |

| nlevel | Number of levels |

| nyear | Number of years |

| nmon | Number of months |

| ndate | Number of dates |

| ntime | Number of timesteps |

| ngridpoints | Number of gridpoints |

| ngrids | Number of horizontal grids |

| showformat | Show file format |

| showcode | Show code numbers |

| showname | Show variable names |

| showstdname | Show standard names |

| showlevel | Show levels |

| showltype | Show GRIB level types |

| showyear | Show years |

| showmon | Show months |

| showdate | Show date information |

| showtime | Show time information |

| showtimestamp | Show timestamp |

| showchunkspec | Show chunk specification |

| showfilter | Show filter specification |

| showattribute | Show a global attribute or a variable attribute |

| partab | Parameter table |

| codetab | Parameter code table |

| griddes | Grid description |

| zaxisdes | Z-axis description |

| vct | Vertical coordinate table |

2.1.1 INFO - Information and simple statistics

Synopsis

<operator> infiles

Description

This module writes information about the structure and contents for each field of all input files to standard output. A field is a horizontal layer of a data variable. All input files need to have the same structure with the same variables on different timesteps. The information displayed depends on the chosen operator.

Operators

- info

-

Dataset information listed by parameter identifier

Prints information and simple statistics for each field of all input datasets. For each field the operator prints one line with the following elements:-

Date and Time

-

Level, Gridsize and number of Missing values

-

Minimum, Mean and Maximum

The mean value is computed without the use of area weights! -

Parameter identifier

-

- infon

-

Dataset information listed by parameter name

The same as operator info but using the name instead of the identifier to label the parameter. - cinfo

-

Compact information listed by parameter name

cinfo is a compact version of infon. It prints the minimum, mean and maximum value for each variable across all layers and time steps. - map

-

Dataset information and simple map

Prints information, simple statistics and a map for each field of all input datasets. The map will be printed only for fields on a regular lon/lat grid.

Example

To print information and simple statistics for each field of a dataset use:

cdo infon infile

This is an example result of a dataset with one 2D parameter over 12 timesteps:

-1 : Date Time Level Size Miss : Minimum Mean Maximum : Name 1 : 1987-01-31 12:00:00 0 2048 1361 : 232.77 266.65 305.31 : SST 2 : 1987-02-28 12:00:00 0 2048 1361 : 233.64 267.11 307.15 : SST 3 : 1987-03-31 12:00:00 0 2048 1361 : 225.31 267.52 307.67 : SST 4 : 1987-04-30 12:00:00 0 2048 1361 : 215.68 268.65 310.47 : SST 5 : 1987-05-31 12:00:00 0 2048 1361 : 215.78 271.53 312.49 : SST 6 : 1987-06-30 12:00:00 0 2048 1361 : 212.89 272.80 314.18 : SST 7 : 1987-07-31 12:00:00 0 2048 1361 : 209.52 274.29 316.34 : SST 8 : 1987-08-31 12:00:00 0 2048 1361 : 210.48 274.41 315.83 : SST 9 : 1987-09-30 12:00:00 0 2048 1361 : 210.48 272.37 312.86 : SST 10 : 1987-10-31 12:00:00 0 2048 1361 : 219.46 270.53 309.51 : SST 11 : 1987-11-30 12:00:00 0 2048 1361 : 230.98 269.85 308.61 : SST 12 : 1987-12-31 12:00:00 0 2048 1361 : 241.25 269.94 309.27 : SST

2.1.2 SINFO - Short information

Synopsis

<operator> infiles

Description

This module writes information about the structure of infiles to standard output. infiles is an arbitrary number of input files. All input files need to have the same structure with the same variables on different timesteps. The information displayed depends on the chosen operator.

Operators

- sinfo

-

Short information listed by parameter identifier

Prints short information of a dataset. The information is divided into 4 sections. Section 1 prints one line per parameter with the following information:-

institute and source

-

time c=constant v=varying

-

type of statistical processing

-

number of levels and z-axis number

-

horizontal grid size and number

-

data type

-

parameter identifier

Section 2 and 3 gives a short overview of all grid and vertical coordinates. And the last section contains short information of the time coordinate.

-

- sinfon

-

Short information listed by parameter name

The same as operator sinfo but using the name instead of the identifier to label the parameter.

Example

To print short information of a dataset use:

cdo sinfon infile

This is the result of an ECHAM5 dataset with 3 parameter over 12 timesteps:

-1 : Institut Source T Steptype Levels Num Points Num Dtype : Name 1 : MPIMET ECHAM5 c instant 1 1 2048 1 F32 : GEOSP 2 : MPIMET ECHAM5 v instant 4 2 2048 1 F32 : T 3 : MPIMET ECHAM5 v instant 1 1 2048 1 F32 : TSURF Grid coordinates : 1 : gaussian : points=2048 (64x32) F16 longitude : 0 to 354.375 by 5.625 degrees_east circular latitude : 85.7606 to -85.7606 degrees_north Vertical coordinates : 1 : surface : levels=1 2 : pressure : levels=4 level : 92500 to 20000 Pa Time coordinate : time : 12 steps YYYY-MM-DD hh:mm:ss YYYY-MM-DD hh:mm:ss YYYY-MM-DD hh:mm:ss YYYY-MM-DD hh:mm:ss 1987-01-31 12:00:00 1987-02-28 12:00:00 1987-03-31 12:00:00 1987-04-30 12:00:00 1987-05-31 12:00:00 1987-06-30 12:00:00 1987-07-31 12:00:00 1987-08-31 12:00:00 1987-09-30 12:00:00 1987-10-31 12:00:00 1987-11-30 12:00:00 1987-12-31 12:00:00

2.1.3 XSINFO - Extra short information

Synopsis

<operator> infiles

Description

This module writes information about the structure of infiles to standard output. infiles is an arbitrary number of input files. All input files need to have the same structure with the same variables on different timesteps. The information displayed depends on the chosen operator.

Operators

- xsinfo

-

Extra short information listed by parameter name

Prints short information of a dataset. The information is divided into 4 sections. Section 1 prints one line per parameter with the following information:-

institute and source

-

time c=constant v=varying

-

type of statistical processing

-

number of levels and z-axis number

-

horizontal grid size and number

-

data type

-

memory type (float or double)

-

parameter name

Section 2 to 4 gives a short overview of all grid, vertical and time coordinates.

-

- xsinfop

-

Extra short information listed by parameter identifier

The same as operator xsinfo but using the identifier instead of the name to label the parameter.

Example

To print extra short information of a dataset use:

cdo xsinfo infile

This is the result of an ECHAM5 dataset with 3 parameter over 12 timesteps:

-1 : Institut Source T Steptype Levels Num Points Num Dtype Mtype : Name 1 : MPIMET ECHAM5 c instant 1 1 2048 1 F32 F32 : GEOSP 2 : MPIMET ECHAM5 v instant 4 2 2048 1 F32 F32 : T 3 : MPIMET ECHAM5 v instant 1 1 2048 1 F32 F32 : TSURF Grid coordinates : 1 : gaussian : points=2048 (64x32) F16 longitude: 0 to 354.375 by 5.625 degrees_east circular latitude: 85.7606 to -85.7606 degrees_north Vertical coordinates : 1 : surface : levels=1 2 : pressure : levels=4 level: 92500 to 20000 Pa Time coordinate : steps: 12 time: 1987-01-31T18:00:00 to 1987-12-31T18:00:00 by 1 month units: days since 1987-01-01T00:00:00 calendar: proleptic_gregorian

2.1.4 DIFF - Compare two datasets field by field

Synopsis

<operator>[,parameter] infile1 infile2

Description

Compares the contents of two datasets field by field. The input datasets need to have the same structure and its fields need to have the dimensions. Try the option names if the number of variables differ. Exit status is 0 if inputs are the same and 1 if they differ.

Operators

- diff

-

Compare two datasets listed by parameter id

Provides statistics on differences between two datasets. For each pair of fields the operator prints one line with the following information:-

Date and Time

-

Level, Gridsize and number of Missing values

-

Number of different values

-

Occurrence of coefficient pairs with different signs (S)

-

Occurrence of zero values (Z)

-

Maxima of absolute difference of coefficient pairs

-

Maxima of relative difference of non-zero coefficient pairs with equal signs

-

Parameter identifier

-

- diffn

-

Compare two datasets listed by parameter name

The same as operator diff. Using the name instead of the identifier to label the parameter.

Parameter

- maxcount

-

INTEGER Stop after maxcount different fields

- abslim

-

FLOAT Limit of the maximum absolute difference (default: 0)

- rellim

-

FLOAT Limit of the maximum relative difference (default: 1)

- names

-

STRING Consideration of the variable names of only one input file (left/right) or the intersection of both (intersect).

Example

To print the difference for each field of two datasets use:

cdo diffn infile1 infile2

This is an example result of two datasets with one 2D parameter over 12 timesteps:

Date Time Level Size Miss Diff : S Z Max_Absdiff Max_Reldiff : Name 1 : 1987-01-31 12:00:00 0 2048 1361 273 : F F 0.00010681 4.1660e-07 : SST 2 : 1987-02-28 12:00:00 0 2048 1361 309 : F F 6.1035e-05 2.3742e-07 : SST 3 : 1987-03-31 12:00:00 0 2048 1361 292 : F F 7.6294e-05 3.3784e-07 : SST 4 : 1987-04-30 12:00:00 0 2048 1361 183 : F F 7.6294e-05 3.5117e-07 : SST 5 : 1987-05-31 12:00:00 0 2048 1361 207 : F F 0.00010681 4.0307e-07 : SST 7 : 1987-07-31 12:00:00 0 2048 1361 317 : F F 9.1553e-05 3.5634e-07 : SST 8 : 1987-08-31 12:00:00 0 2048 1361 219 : F F 7.6294e-05 2.8849e-07 : SST 9 : 1987-09-30 12:00:00 0 2048 1361 188 : F F 7.6294e-05 3.6168e-07 : SST 10 : 1987-10-31 12:00:00 0 2048 1361 297 : F F 9.1553e-05 3.5001e-07 : SST 11 : 1987-11-30 12:00:00 0 2048 1361 234 : F F 6.1035e-05 2.3839e-07 : SST 12 : 1987-12-31 12:00:00 0 2048 1361 267 : F F 9.3553e-05 3.7624e-07 : SST 11 of 12 fields differ

2.1.5 NINFO - Print the number of parameters, levels or times

Synopsis

<operator> infile

Description

This module prints the number of variables, levels or times of the input dataset.

Operators

- npar

-

Number of parameters

Prints the number of parameters (variables). - nlevel

-

Number of levels

Prints the number of levels for each variable. - nyear

-

Number of years

Prints the number of different years. - nmon

-

Number of months

Prints the number of different combinations of years and months. - ndate

-

Number of dates

Prints the number of different dates. - ntime

-

Number of timesteps

Prints the number of timesteps. - ngridpoints

-

Number of gridpoints

Prints the number of gridpoints for each variable. - ngrids

-

Number of horizontal grids

Prints the number of horizontal grids.

Example

To print the number of parameters (variables) in a dataset use:

cdo npar infile

To print the number of months in a dataset use:

cdo nmon infile

2.1.6 SHOWINFO - Show variable information

Synopsis

<operator> infile

Description

This module prints meta-data information of all input variables. Depending on the chosen operator the name, level, date, time and other information is printed.

Operators

- showformat

-

Show file format

Prints the file format of the input dataset. - showcode

-

Show code numbers

Prints the code number of all variables. - showname

-

Show variable names

Prints the name of all variables. - showstdname

-

Show standard names

Prints the standard name of all variables. - showlevel

-

Show levels

Prints all levels for each variable. - showltype

-

Show GRIB level types

Prints the GRIB level type for all z-axes. - showyear

-

Show years

Prints all years. - showmon

-

Show months

Prints all months. - showdate

-

Show date information

Prints date information of all timesteps (format YYYY-MM-DD). - showtime

-

Show time information

Prints time information of all timesteps (format hh:mm:ss). - showtimestamp

-

Show timestamp

Prints timestamp of all timesteps (format YYYY-MM-DDThh:mm:ss). - showchunkspec

-

Show chunk specification

Prints NetCDF4 chunk specification of all variables. - showfilter

-

Show filter specification

Prints NetCDF4 filter specification of all variables.

Example

To print the code number of all variables in a dataset use:

cdo showcode infile

This is an example result of a dataset with three variables:

129 130 139

To print all months in a dataset use:

cdo showmon infile

This is an examples result of a dataset with an annual cycle:

1 2 3 4 5 6 7 8 9 10 11 12

2.1.7 SHOWATTRIBUTE - Show attributes

Synopsis

showattribute[,attributes] infile

Description

This operator prints the attributes of the data variables of a dataset.

Each attribute has the following structure:

[var_nm@][att_nm]

- var_nm

-

Variable name (optional). Example: pressure

- att_nm

-

Attribute name (optional). Example: units

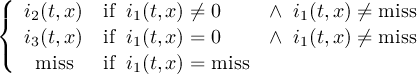

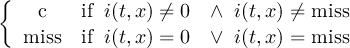

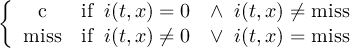

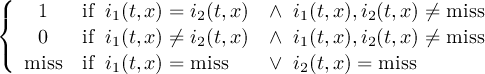

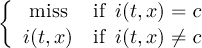

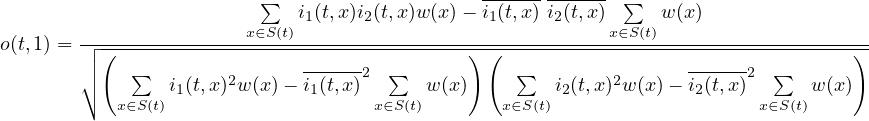

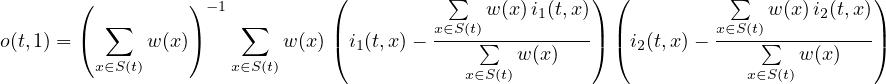

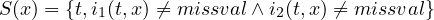

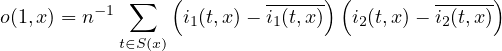

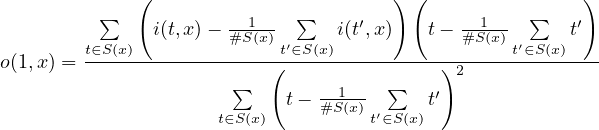

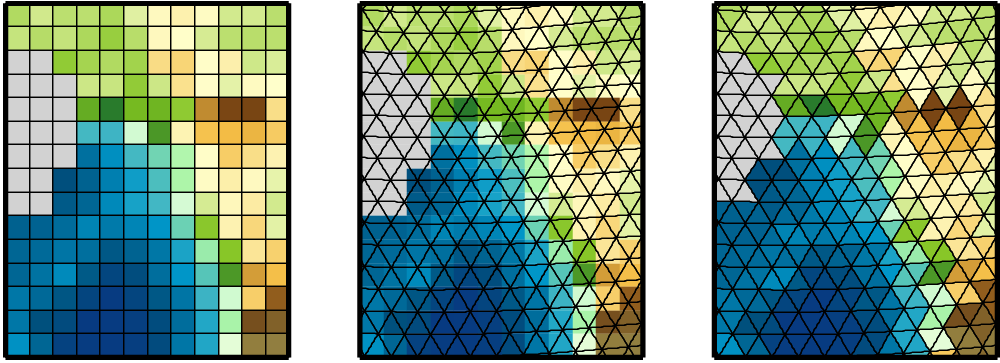

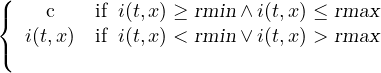

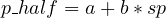

The value of var_nm is the name of the variable containing the attribute (named att_nm) that you want to print. Use wildcards to print the attribute att_nm of more than one variable. A value of var_nm of ’*’ will print the attribute att_nm of all data variables. If var_nm is missing then att_nm refers to a global attribute.