hourly anomalies calculation

Added by Ella E over 6 years ago

Hi,

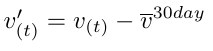

I would like to calculate hourly anomalies from 3-hourly data that has 4 dimensions (time, pressure height, latitude, longitude). To do this, I must subtract the monthly mean from the data. Please see the attached picture for the equation that I am using to calculate the hourly anomalies.

Attachment: eddieseq.png (3.45 KB)
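The attached equation is not reproduced in the text. As a hedged sketch only, an anomaly of this kind is commonly written as the field minus its monthly time mean. The symbols below ($x$ for the field, $x'$ for the anomaly, $M(t)$ for the set of timesteps in the month containing $t$, $N_M$ for their number) are assumptions for illustration and may differ from the notation in the picture:

$$
x'(t, p, \phi, \lambda) = x(t, p, \phi, \lambda) - \frac{1}{N_M} \sum_{t_i \in M(t)} x(t_i, p, \phi, \lambda)
$$

With 3-hourly data on a 360-day calendar, every month has 30 days and therefore $N_M = 30 \times 8 = 240$ timesteps.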

Replies (11)

RE: hourly anomalies calculation - Added by Ralf Mueller over 6 years ago

hi!

cdo -sub <ifile> -monmean <ifile> <ofile>

This uses real calendar months instead of 30-day months.

hth

ralf

RE: hourly anomalies calculation - Added by Ella E over 6 years ago

Hi,

thank you for your reply. I have tried it, but I got the following error message:

cdo(2) monmean: Process started

cdo sub: Filling up stream2 >monmean< by copying all timesteps.

cdo(2) monmean: Processed 2707292160 values from 1 variable over 8640 timesteps

Error (pipeInqTimestep): (pipe1.2) unexpected tsID 0 37 35

RE: hourly anomalies calculation - Added by Ralf Mueller over 6 years ago

Then I think I need access to your input file. Can you upload it?

RE: hourly anomalies calculation - Added by Ella E over 6 years ago

Hi Ralf,

The data file is too big to be uploaded here. I attached a wget script that downloads the input file.

use:

chmod 700 wget-20190320154657.sh

./wget-20190320154657.sh

Attachment: wget-20190320154657.sh (32.7 KB)

RE: hourly anomalies calculation - Added by Ralf Mueller over 6 years ago

It only works with ESGF credentials. But you can use MPI's public FTP server: ftp.zmaw.de/incoming, user: anonymous, password: an email address

RE: hourly anomalies calculation - Added by Ella E over 6 years ago

Hi Ralf,

I don't know how to access the ftp server, I tried the link you posted, but it doesn't work for me.

RE: hourly anomalies calculation - Added by Ralf Mueller over 6 years ago

What about uploading only a single variable or a single level? What about horizontal interpolation to a very coarse grid? This problem seems to be time-axis-related, so a single grid point might be enough to reproduce your results.
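One way to produce such a reduced file is sketched below. It keeps the full time axis but only the first vertical level and a single grid cell, using the CDO operators sellevidx and selindexbox. The function name make_small_sample, the file names, and the choice of level index 1 and cell index (1,1) are assumptions for illustration, not something suggested in the thread.

```shell
# Sketch: shrink a large file to one level and one grid cell while
# keeping every timestep, so that time-axis problems stay reproducible.
# make_small_sample is a hypothetical helper name.
make_small_sample() {
    # $1: input file, $2: output file
    # sellevidx,1 keeps the first vertical level (applied first);
    # selindexbox,1,1,1,1 then keeps the grid cell at index (1,1).
    cdo -selindexbox,1,1,1,1 -sellevidx,1 "$1" "$2"
}

# Usage (file names are placeholders):
#   make_small_sample big_input.nc small_sample.nc
```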

RE: hourly anomalies calculation - Added by Ralf Mueller about 6 years ago

Alright Ella, here is my solution (I created an ESGF account for myself to get the real data):

- split the file into monthly files: this is done with splitsel, because all your months have the same number of timesteps (360-day calendar)

- compute the mean value of each of these files and duplicate the single timestep (the monthly mean) back to the 240 timesteps of a month

- concatenate all of the files

- subtract this result from the initial data file

ifile=va_A3hr_ECHAM61_aquaControlTRACMIP_r1i1p1_004301010000-004512302100.nc

cdo -v splitsel,240 $ifile $(basename $ifile .nc)_ # STEP 1

for monfile in $(basename $ifile .nc)_*; do

echo $monfile

cdo -duplicate,240 -monmean $monfile hourlyMonmean_$monfile # STEP 2

done

cdo -sub $ifile -cat [ hourlyMonmean_*nc ] subfile.nc # STEP 3 and 4

RE: hourly anomalies calculation - Added by Ella E about 6 years ago

Hi Ralf,

Thank you very much. Your code is working and doing what it is supposed to do, except that I get an error on the sub line:

cdo sub (Abort): too many streams! Operator need 2 input and 1 output streams.

I am currently using cdo-1.6.8rc3 (maybe this is what prevents the sub line from working). So I split the cdo sub line into two steps: first I concatenated all the hourlyMonmean files with cat, then I did the subtraction, and it works.
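The two-step workaround described here could look roughly like the following sketch. The function name subtract_monthly_means and the intermediate file name allMonmean.nc are assumptions for illustration; the exact commands that were run are not shown in the thread.

```shell
# Sketch of the two-step variant for older CDO versions:
# concatenate first, then subtract.
# subtract_monthly_means and allMonmean.nc are hypothetical names.
subtract_monthly_means() {
    # $1: original 3-hourly input file, $2: output file with anomalies
    # cdo cat appends to an existing output file, so remove leftovers first.
    rm -f allMonmean.nc
    # Step 3: concatenate the duplicated monthly means in file-name order.
    cdo cat hourlyMonmean_*nc allMonmean.nc &&
    # Step 4: subtract the concatenated means from the original data.
    cdo sub "$1" allMonmean.nc "$2"
}

# Usage (as in the script above):
#   subtract_monthly_means "$ifile" subfile.nc
```

The intermediate name deliberately does not match the hourlyMonmean_*nc pattern, so a rerun does not pick up the concatenated file as an input.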

There is a lot for me to learn, thank you again.

RE: hourly anomalies calculation - Added by Ralf Mueller about 6 years ago

hi!

Just update to cdo-1.9.6, then it should work.